It is important to understand attack strategies against machine learning. At a high level, attacks against classifiers fall into three types:

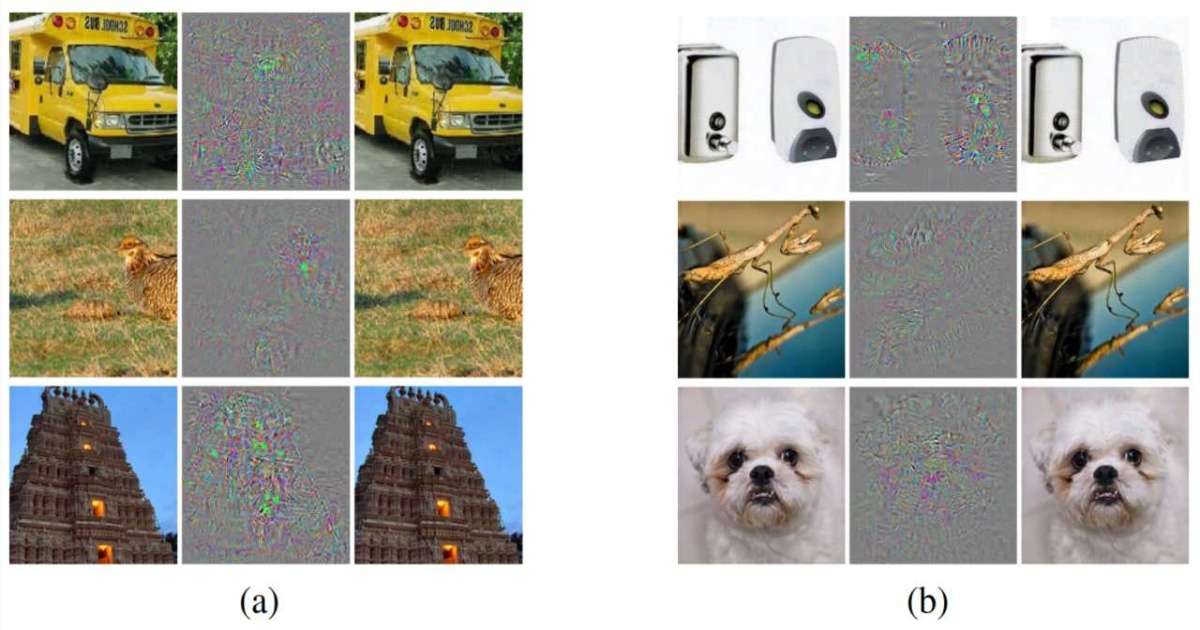

Adversarial inputs:

These are specially crafted inputs designed to be reliably misclassified in order to evade detection. Examples include malicious documents crafted to evade detection and emails attempting to evade spam filters.
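To make the idea concrete, here is a minimal sketch that assumes a toy linear spam scorer whose weights (`WEIGHTS` and `BIAS`, both hypothetical) are known to the attacker. For a linear model the gradient of the score is simply the weight vector, so shifting each feature by a small `eps` against the sign of its weight (the idea behind gradient-sign attacks such as FGSM) lowers the score as much as that per-feature budget allows.

```python
from typing import List

# Hypothetical linear spam classifier: a positive score means "spam".
WEIGHTS = [2.0, 1.5, -1.0]  # illustrative per-feature weights (assumed)
BIAS = -1.0

def score(x: List[float]) -> float:
    """Linear decision function w·x + b."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def is_spam(x: List[float]) -> bool:
    return score(x) > 0

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def evade(x: List[float], eps: float) -> List[float]:
    """Move every feature by eps against the sign of its weight.

    The gradient of a linear score with respect to x is the weight vector,
    so this gradient-sign step lowers the score by eps * sum(|w|).
    """
    return [xi - eps * sign(w) for w, xi in zip(WEIGHTS, x)]

original = [1.0, 1.0, 0.5]
adversarial = evade(original, eps=0.5)
print(is_spam(original), is_spam(adversarial))  # True False
```

Real attackers rarely see the weights; they have to approximate this step by probing the system, which is what the defenses below try to make expensive.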

Limit information leakage

The goal here is to ensure that attackers gain as little insight as possible when they probe your system. Keep feedback minimal and delay it as much as possible; for instance, avoid returning detailed error codes or confidence values.
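One way to apply this, sketched here under assumed names (`InternalVerdict`, `public_response`, the rule name, and the 0.8 threshold are all hypothetical), is to keep the detailed verdict on the server and return only a coarse decision to the caller:

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class InternalVerdict:
    """Everything the detection pipeline knows; never sent to clients."""
    score: float  # raw model confidence in [0, 1]
    triggered_rules: List[str] = field(default_factory=list)

def public_response(verdict: InternalVerdict, threshold: float = 0.8) -> Dict[str, str]:
    """Return only a coarse decision: no score, no rule names, no error detail."""
    if verdict.score >= threshold or verdict.triggered_rules:
        return {"status": "rejected"}
    return {"status": "accepted"}

v = InternalVerdict(score=0.93, triggered_rules=["hypothetical_rule_17"])
print(public_response(v))  # {'status': 'rejected'}
```

Delaying the feedback as well, for example by applying the decision asynchronously instead of in the immediate response, would further reduce what each probe reveals.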

Limit probing

The goal of this strategy is to slow attackers down by limiting how many payloads they can test against your systems. By restricting how much testing an attacker can perform, you effectively reduce the rate at which they can devise harmful payloads.
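A per-key sliding-window limiter is one common way to cap probing. The sketch below is a minimal, single-process illustration; the class name, the limits, and keying on an account ID are assumptions, and a production system would typically keep this state in a shared store.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional

class SlidingWindowLimiter:
    """Allow at most `max_requests` submissions per `window_s` seconds per key."""

    def __init__(self, max_requests: int, window_s: float) -> None:
        self.max_requests = max_requests
        self.window_s = window_s
        self._hits: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, key: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        hits = self._hits[key]
        # Forget submissions that have slid out of the window.
        while hits and now - hits[0] >= self.window_s:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over budget: reject without saying why
        hits.append(now)
        return True

limiter = SlidingWindowLimiter(max_requests=3, window_s=60.0)
results = [limiter.allow("account-42", now=t) for t in (0, 1, 2, 3)]
print(results)  # [True, True, True, False]
print(limiter.allow("account-42", now=61))  # True: the oldest probes have expired
```

Keying on the account alone is easy to sidestep with fresh accounts, so it may help to apply limits to several keys at once, such as account, IP address, and device.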

A side effect of aggressive rate limiting is that it gives bad actors an incentive to create fake accounts and to use compromised user computers to diversify their pool of IP addresses. The widespread use of rate limiting across the industry is a major driving factor behind the rise of very active black-market forums where accounts and IP addresses are traded, as visible in the screenshot above.

Ensemble learning

Lastly, it is important to combine various detection mechanisms to make it harder for attackers to bypass the system. Using ensemble learning to combine different kinds of detection methods, such as reputation-based mechanisms, AI classifiers, detection rules, and anomaly detection, improves the robustness of your system, because bad actors have to craft payloads that evade all of those mechanisms at once.
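A minimal sketch of such a combination follows. The four detectors, their feature names, and their thresholds are hypothetical placeholders standing in for a reputation system, an ML classifier, hand-written rules, and an anomaly detector.

```python
from typing import Callable, Dict, List

Event = Dict[str, float]

# Hypothetical detectors, each looking at a different signal of the same event.
def bad_reputation(event: Event) -> bool:
    return event["sender_reputation"] < 0.2

def classifier_flags(event: Event) -> bool:
    return event["model_score"] > 0.9

def rule_matches(event: Event) -> bool:
    return event["blocklisted_urls"] > 0

def is_anomalous(event: Event) -> bool:
    return event["send_rate_zscore"] > 3.0

DETECTORS: List[Callable[[Event], bool]] = [
    bad_reputation, classifier_flags, rule_matches, is_anomalous,
]

def ensemble_verdict(event: Event, min_votes: int = 1) -> bool:
    """Flag the event when at least `min_votes` detectors fire."""
    votes = sum(detector(event) for detector in DETECTORS)
    return votes >= min_votes

# A payload tuned to fool the ML classifier still trips the anomaly detector.
evasive = {"sender_reputation": 0.9, "model_score": 0.3,
           "blocklisted_urls": 0, "send_rate_zscore": 4.2}
print(ensemble_verdict(evasive), ensemble_verdict(evasive, min_votes=2))  # True False
```

With `min_votes=1` a payload must evade every detector, which matches the robustness argument above; raising `min_votes` trades some of that robustness for fewer false positives.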

Data poisoning attacks:

Here the attacker attempts to pollute the training data in such a way that the boundary between what the classifier categorizes as good data and what it categorizes as bad shifts in their favor. The second kind of attack we observe in the wild is feedback weaponization, which abuses feedback mechanisms in an attempt to manipulate the system into misclassifying good content as abusive.
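The sketch below illustrates the boundary shift with a deliberately simple, hypothetical 1-D classifier whose threshold sits halfway between the mean feature values of the two classes. Assuming the attacker can get a few high-valued samples labeled as good into the training data (for example through weaponized feedback), the threshold moves up and a payload that used to be caught slips through.

```python
from statistics import mean
from typing import List, Tuple

Sample = Tuple[float, str]  # (feature value, label: "good" or "bad")

def train_threshold(data: List[Sample]) -> float:
    """Toy classifier: threshold halfway between the two class means."""
    good = mean([x for x, label in data if label == "good"])
    bad = mean([x for x, label in data if label == "bad"])
    return (good + bad) / 2

def classify(x: float, threshold: float) -> str:
    return "bad" if x > threshold else "good"

clean = [(1.0, "good"), (2.0, "good"), (3.0, "good"),
         (7.0, "bad"), (8.0, "bad"), (9.0, "bad")]
# Attacker-controlled samples: high (bad-looking) values labeled as good.
poison = [(8.0, "good")] * 3

clean_t = train_threshold(clean)
poisoned_t = train_threshold(clean + poison)
payload = 6.0
print(clean_t, classify(payload, clean_t))        # 5.0 bad
print(poisoned_t, classify(payload, poisoned_t))  # 6.5 good
```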

Use sensible data sampling

You need to ensure that a small group of entities, such as IP addresses or users, cannot account for an outsized fraction of the model's training data. In particular, be careful not to over-weight the false positives and false negatives that users report. This can be achieved by limiting the number of examples each user can contribute, or by using decaying weights based on the number of examples reported.
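Both measures can be sketched in a few lines. The per-user cap of 3, the geometric decay factor of 0.5, and the report data below are illustrative assumptions, not recommended values.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def sample_reports(reports: List[Tuple[str, str]], max_per_user: int = 3,
                   decay: float = 0.5) -> List[Tuple[str, str, float]]:
    """Turn (user_id, example_id) reports into weighted training examples.

    Each user contributes at most `max_per_user` examples, and the k-th
    example from a user (k = 0, 1, ...) gets weight decay ** k.
    """
    counts: Dict[str, int] = defaultdict(int)
    weighted: List[Tuple[str, str, float]] = []
    for user_id, example_id in reports:
        k = counts[user_id]
        if k >= max_per_user:
            continue  # cap reached: ignore further reports from this user
        weighted.append((user_id, example_id, decay ** k))
        counts[user_id] = k + 1
    return weighted

# One prolific reporter versus two ordinary users (hypothetical data).
reports = [("mallory", f"ex{i}") for i in range(10)]
reports += [("alice", "ex100"), ("bob", "ex200")]

sampled = sample_reports(reports)
totals: Dict[str, float] = defaultdict(float)
for user_id, _, weight in sampled:
    totals[user_id] += weight
print(dict(totals))  # {'mallory': 1.75, 'alice': 1.0, 'bob': 1.0}
```

Mallory's ten reports end up carrying 1.75 units of weight in total, compared with 1.0 for each single report from Alice and Bob.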

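Compare your newly trained classifier to the previous one

Before deploying a retrained classifier, compare it with the previous one to estimate how much has changed. For instance, you can perform a dark launch and compare the two outputs on the same traffic.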
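A minimal way to quantify the change during a dark launch is the disagreement rate between the two models on the same requests. The toy keyword models and traffic below are hypothetical stand-ins:

```python
from typing import Callable, List

Classifier = Callable[[str], bool]

def disagreement_rate(old: Classifier, new: Classifier, traffic: List[str]) -> float:
    """Fraction of requests on which the two models return different verdicts.

    In a dark launch, `new` scores the same live traffic as `old`,
    but only `old`'s verdict is acted on.
    """
    if not traffic:
        return 0.0
    diffs = sum(old(x) != new(x) for x in traffic)
    return diffs / len(traffic)

# Hypothetical keyword models standing in for the current and retrained classifiers.
def current_model(text: str) -> bool:
    return "free money" in text

def retrained_model(text: str) -> bool:
    return "free" in text

traffic = ["free money now", "free shipping on orders", "meeting at 3pm", "lunch?"]
print(disagreement_rate(current_model, retrained_model, traffic))  # 0.25
```

A sudden jump in disagreement does not prove poisoning, but it is a cheap signal for holding a rollout until the differences have been reviewed.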

Build a golden dataset

Your classifier must predict this dataset accurately before it can be launched in production. The dataset ideally contains a curated collection of attacks and normal content that are representative of your system. This process ensures that you can detect when a weaponization attack causes a significant regression in your model before it negatively impacts your users.
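A release gate based on such a dataset might look like the following sketch; the examples, the toy models, and the 0.99 accuracy bar are hypothetical:

```python
from typing import Callable, List, Tuple

GoldenExample = Tuple[str, bool]  # (content, is_abusive)

# Hypothetical curated examples: known attacks plus representative normal content.
GOLDEN_SET: List[GoldenExample] = [
    ("buy cheap pills now", True),
    ("free money, click here", True),
    ("notes from today's team meeting", False),
    ("photos from the family trip", False),
]

def golden_accuracy(model: Callable[[str], bool], golden: List[GoldenExample]) -> float:
    correct = sum(model(content) == label for content, label in golden)
    return correct / len(golden)

def ready_for_production(model: Callable[[str], bool], min_accuracy: float = 0.99) -> bool:
    """Block the release if the candidate regresses on the golden set."""
    return golden_accuracy(model, GOLDEN_SET) >= min_accuracy

def candidate(text: str) -> bool:
    return "cheap pills" in text or "free money" in text

def poisoned_candidate(text: str) -> bool:
    # A model whose boundary was pushed so far that it no longer flags anything.
    return False

print(ready_for_production(candidate), ready_for_production(poisoned_candidate))  # True False
```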

Model stealing techniques:

These techniques are used to “steal” (i.e., duplicate) models, for instance stock market prediction models and spam-filtering models, in order to use them or to optimize attacks more efficiently against them.

Model reconstruction

The key idea here is that the attacker is able to recreate a model by probing its public API and gradually refining their own model, using the API as an oracle. This approach appears to be effective against most AI algorithms, including SVMs, random forests, and deep neural networks.
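The sketch below shows the simplest version of this idea, an equation-solving extraction, under the assumption that the API returns the raw score of a linear model (the secret weights are made up for illustration). With `dim + 1` queries the attacker recovers the model exactly:

```python
from typing import Callable, List, Tuple

Vector = List[float]

def make_victim_api() -> Callable[[Vector], float]:
    """Hypothetical prediction API wrapping a secret linear model."""
    secret_w = [0.7, -1.3, 2.1]  # made-up weights the attacker cannot see
    secret_b = 0.4

    def api(x: Vector) -> float:
        return sum(w * xi for w, xi in zip(secret_w, x)) + secret_b

    return api

def extract_linear_model(api: Callable[[Vector], float], dim: int) -> Tuple[Vector, float]:
    """Recover (w, b) with dim + 1 queries.

    The all-zeros input returns b; the i-th unit vector returns w_i + b.
    """
    b = api([0.0] * dim)
    w: Vector = []
    for i in range(dim):
        unit = [0.0] * dim
        unit[i] = 1.0
        w.append(api(unit) - b)
    return w, b

api = make_victim_api()
stolen_w, stolen_b = extract_linear_model(api, dim=3)
print([round(v, 6) for v in stolen_w], round(stolen_b, 6))  # [0.7, -1.3, 2.1] 0.4
```

Against a label-only API the attacker has to fall back to the gradual, query-and-refine approach described above, which is one reason to avoid returning confidence values.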

Membership leakage

Here, the attacker builds shadow models that enable them to determine whether a given record was used to train a model.
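The following is a heavily simplified sketch of the shadow-model idea. It assumes the attacker can train a shadow model the same way as the target (here a toy model that simply memorizes its training points) on data whose membership they know, and it reduces the attack model to a single confidence threshold learned from the shadow model rather than a separately trained classifier. All names and data are hypothetical.

```python
import math
import random
from typing import Callable, List, Tuple

Point = Tuple[float, float]

def train(data: List[Point]) -> List[Point]:
    """Toy, deliberately overfitting 'model': it memorizes its training points."""
    return list(data)

def confidence(model: List[Point], x: Point) -> float:
    """1.0 on a memorized point, decaying with squared distance to the nearest one."""
    d2 = min((px - x[0]) ** 2 + (py - x[1]) ** 2 for px, py in model)
    return math.exp(-d2)

def random_points(rng: random.Random, n: int) -> List[Point]:
    return [(rng.uniform(0, 10), rng.uniform(0, 10)) for _ in range(n)]

def calibrate_on_shadow(rng: random.Random, n: int = 20) -> float:
    """Train a shadow model on data with known membership and return a
    confidence threshold halfway between members and non-members."""
    members = random_points(rng, n)
    outsiders = random_points(rng, n)
    shadow = train(members)
    mean_in = sum(confidence(shadow, x) for x in members) / n
    mean_out = sum(confidence(shadow, x) for x in outsiders) / n
    return (mean_in + mean_out) / 2

def infer_membership(target_api: Callable[[Point], float], record: Point,
                     threshold: float) -> bool:
    """Guess 'member' when the target is more confident than the threshold."""
    return target_api(record) > threshold

rng = random.Random(0)
threshold = calibrate_on_shadow(rng)

# The victim's model, trained the same way on private data the attacker never sees.
private_data = random_points(rng, 20)
target_model = train(private_data)

def target_api(x: Point) -> float:
    return confidence(target_model, x)

print(infer_membership(target_api, private_data[0], threshold))  # True
print(infer_membership(target_api, (100.0, 100.0), threshold))   # False
```

The attack works here because the toy model is far more confident on records it was trained on; real models usually leak less, but overfitting creates the same kind of gap.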
